Perspective Correct Interpolation and Vertex Attributes

Interpolating Vertex Attributes

To get a basic rasterizer working, we need to understand how to project triangles onto the screen, convert the projected coordinates to raster space, rasterize triangles, and potentially use a depth-buffer to address the visibility problem. Achieving this allows us to create images of 3D scenes that are both perspective-correct and in which the visibility problem is solved—ensuring objects that should be hidden by others do not appear in front of objects that are supposed to occlude them. This outcome is already significant. The code we have presented for accomplishing these tasks is functional but could be greatly optimized. However, optimizing the rasterization algorithm is not the focus of this lesson.

Perspective Correct Vertex Attribute Interpolation

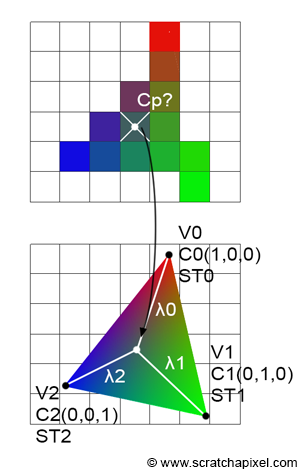

In this chapter, we look at how barycentric coordinates are used to interpolate vertex data, commonly referred to in rendering as vertex attributes. So far, barycentric coordinates have mainly served to interpolate depth values, but they can interpolate any vertex attribute in the same way. Vertex attributes are crucial in rendering, especially for lighting and shading. More details on how vertex attributes are applied in shading will be provided when we approach shading in this section. However, a deep understanding of shading is not necessary to grasp the concepts of perspective correct interpolation and vertex attributes.

One might question why we address this topic now rather than in the shading section, given its closer relation to shading. While vertex attributes are indeed more directly related to shading, the discussion on perspective correct interpolation is particularly relevant to rasterization.

It's essential to store the z-coordinates of the original vertices (from camera space) in the z-coordinate of our projected vertices (in screen space). This step is crucial for computing the depth of points across the surface of the projected triangle, a necessity for resolving the visibility problem. Depth calculation involves linearly interpolating the reciprocal of the z-coordinates of the triangle vertices using barycentric coordinates. This interpolation technique can also apply to any variable defined at the triangle's vertices, akin to how we store the original z-coordinates in the projected points. Commonly, triangles' vertices may carry a color. The other two prevalent attributes stored at the vertices are texture coordinates and normals. Texture coordinates, 2D coordinates used for texturing, and normals, which indicate the surface orientation, are vital for texturing and shading, respectively. This lesson will specifically focus on color and texture coordinates to illustrate the concept of perspective-correct interpolation.

As discussed in the chapter on rasterization, colors or other attributes can be specified for the triangle vertices. These attributes can be interpolated across the surface of the triangle using barycentric coordinates to determine their values at any point within the triangle. In essence, vertex attributes must be interpolated across the surface of a triangle during rasterization. The process unfolds as follows:

- Assign as many vertex attributes to the triangle's vertices as desired. These attributes are defined on the original 3D triangle in camera space. For illustration, we will assign two vertex attributes: one for color and one for texture coordinates.
- Project the triangle onto the screen, converting the triangle's vertices from camera space to raster space.
- In screen space, rasterize the triangle. Compute the barycentric coordinates of a pixel if it overlaps the triangle.
- Interpolate the colors (or texture coordinates) defined at the triangle's vertices using the computed barycentric coordinates with the formula:

$$C_P = \lambda_0 \cdot C_0 + \lambda_1 \cdot C_1 + \lambda_2 \cdot C_2$$

Here, \(\lambda_0\), \(\lambda_1\), and \(\lambda_2\) represent the pixel's barycentric coordinates, and \(C_0\), \(C_1\), and \(C_2\) are the colors at the triangle vertices. The resulting \(C_P\) is then assigned to the current pixel in the frame-buffer. Similarly, this method can compute the texture coordinates of the point on the triangle that the pixel overlaps:

$$ST_P = \lambda_0 \cdot ST_0 + \lambda_1 \cdot ST_1 + \lambda_2 \cdot ST_2$$

These coordinates are used for texturing (refer to the lesson on Texture Mapping in this section to learn about texture coordinates and texturing).

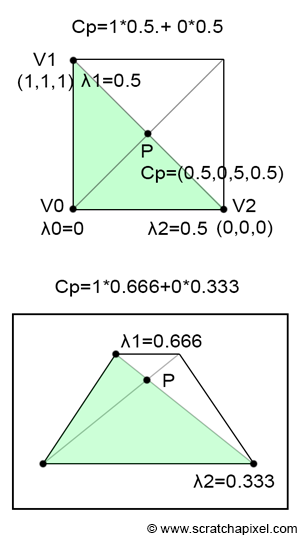

This technique, however, gives incorrect results. To understand why, let's examine what happens to a point located in the middle of a 3D quad. As depicted in the top view of Figure 2, point P lies at the intersection of the quad's diagonals, so it is clearly in the middle of the quad. However, when we view the quad from an arbitrary viewpoint, P may no longer appear at the center of the quad, depending on the quad's orientation relative to the camera. This discrepancy is due to perspective projection, which, as mentioned previously, preserves lines but does not preserve distances.

Remember, however, that barycentric coordinates are computed in screen space. Suppose the quad consists of two triangles. In 3D space, P is equidistant from V1 and V2, giving it barycentric coordinates in 3D space of (0, 0.5, 0.5). Yet, in screen space, since P is closer to V1 than to V2, \(\lambda_1\) is greater than \(\lambda_2\) (with \(\lambda_0\) equal to 0). The issue arises because these screen-space coordinates are the ones used to interpolate the triangle's vertex attributes. If V1 is white (1) and V2 is black (0), then the color at P should be 0.5. However, if \(\lambda_1\) is greater than \(\lambda_2\), we will get a value greater than 0.5, which shows that the interpolation technique is flawed. Assuming, as in Figure 1, that \(\lambda_1\) and \(\lambda_2\) are 0.666 and 0.334 respectively, interpolating the triangle's vertex colors yields:

$$C_P = \lambda_0 \cdot C_0 + \lambda_1 \cdot C_1 + \lambda_2 \cdot C_2 = 0 \cdot C_0 + 0.666 \cdot 1 + 0.334 \cdot 0 = 0.666.$$

This results in a color value of 0.666 for P, not the expected 0.5, highlighting a significant issue. This problem relates to the previous chapter's discussion on interpolating the vertex's z-coordinates.

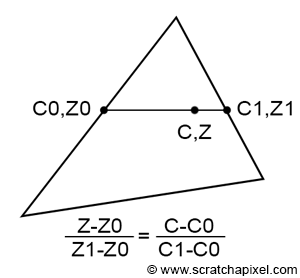

Fortunately, finding the correct solution is straightforward. Imagine we have a triangle with z-coordinates \(Z_0\) and \(Z_1\) on each side, as shown in Figure 3. By connecting these two points, we can interpolate the z-coordinate of a point on this line using linear interpolation. Similarly, we can interpolate between two vertex attribute values, \(C_0\) and \(C_1\), defined at the same positions on the triangle as \(Z_0\) and \(Z_1\), respectively. Since both \(Z\) and \(C\) are computed using linear interpolation, we can establish the following equality (equation 1):

$$\frac{Z - Z_0}{Z_1 - Z_0} = \frac{C - C_0}{C_1 - C_0}.$$

Recalling from the last chapter (equation 2):

$$Z = \frac{1}{\frac{1}{Z_0}(1-q) + \frac{1}{Z_1}q}.$$

First, we substitute the equation for \(Z\) (equation 2) into the left-hand side of equation 1. The trick to simplifying the resulting equation involves multiplying the numerator and denominator of equation 2 by \(Z_0Z_1\) to eliminate the \(1/Z_0\) and \(1/Z_1\) terms:

$$
\begin{align*}
\frac{\frac{1}{\frac{1}{Z_0}(1-q)+\frac{1}{Z_1}q} - Z_0}{Z_1 - Z_0} & = \frac{\frac{Z_0Z_1}{Z_1(1-q)+Z_0q} - Z_0}{Z_1 - Z_0}\\
& = \frac{\frac{Z_0Z_1 - Z_0(Z_1(1-q) + Z_0q)}{Z_1(1-q) + Z_0q}}{Z_1 - Z_0}\\
& = \frac{\frac{Z_0Z_1q - Z_0^2q}{Z_1(1-q) + Z_0q}}{Z_1 - Z_0}\\
& = \frac{\frac{Z_0q(Z_1 - Z_0)}{Z_1(1-q) + Z_0q}}{Z_1 - Z_0}\\
& = \frac{Z_0q}{Z_1(1-q) + Z_0q}\\
& = \frac{Z_0q}{q(Z_0 - Z_1) + Z_1}.
\end{align*}
$$

Setting this expression equal to the right-hand side of equation 1 and solving for \(C\) yields:

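$$ C = \frac{C_0Z_1(1-q) + C_1Z_0q}{Z_1(1 - q) + Z_0q}. $$

Multiplying the numerator and the denominator by \(1/(Z_0Z_1)\) turns the denominator into \(\frac{1}{Z_0}(1-q) + \frac{1}{Z_1}q\), which is \(1/Z\) according to equation 2. We can therefore extract a factor of \(Z\) from the right-hand side of the equation: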

$$ C = Z \left[ \frac{C_0}{Z_0}(1-q) + \frac{C_1}{Z_1}q \right]. $$

This equation is fundamental to rasterization and is used to interpolate vertex attributes, a crucial feature in rendering. It shows that to interpolate a vertex attribute correctly, we first divide each vertex attribute value by the z-coordinate of the vertex it is defined on, then linearly interpolate these divided values using \(q\) (which, for a triangle, corresponds to the barycentric coordinates of the pixel on the 2D triangle), and finally multiply the result by \(Z\), the depth of the point on the triangle that the pixel overlaps.

Here is an updated version of the code from chapter three, illustrating perspective correct vertex attribute interpolation:

To compile, use `c++ -o raster3d raster3d.cpp` for naive vertex attribute interpolation, or `c++ -o raster3d raster3d.cpp -D PERSP_CORRECT` for perspective correct interpolation.

    // (c) www.scratchapixel.com
    #include <cstdint>  // uint32_t
    #include <cstdio>
    #include <cstdlib>
    #include <cstring>  // memset
    #include <fstream>

    typedef float Vec2[2];
    typedef float Vec3[3];
    typedef unsigned char Rgb[3];

    // Twice the signed area of the triangle (a, b, c)
    inline
    float edgeFunction(const Vec3 &a, const Vec3 &b, const Vec3 &c) {
        return (c[0] - a[0]) * (b[1] - a[1]) - (c[1] - a[1]) * (b[0] - a[0]);
    }

    int main(int argc, char **argv) {
        // Triangle vertices in camera space
        Vec3 v2 = {-48, -10, 82};
        Vec3 v1 = {29, -15, 44};
        Vec3 v0 = {13, 34, 114};
        // Vertex colors (the vertex attribute being interpolated)
        Vec3 c2 = {1, 0, 0};
        Vec3 c1 = {0, 1, 0};
        Vec3 c0 = {0, 0, 1};

        const uint32_t w = 512;
        const uint32_t h = 512;

        // Project triangle onto the screen
        v0[0] /= v0[2], v0[1] /= v0[2];
        v1[0] /= v1[2], v1[1] /= v1[2];
        v2[0] /= v2[2], v2[1] /= v2[2];
        // Convert from screen space to NDC then raster (in one go)
        v0[0] = (1 + v0[0]) * 0.5 * w, v0[1] = (1 + v0[1]) * 0.5 * h;
        v1[0] = (1 + v1[0]) * 0.5 * w, v1[1] = (1 + v1[1]) * 0.5 * h;
        v2[0] = (1 + v2[0]) * 0.5 * w, v2[1] = (1 + v2[1]) * 0.5 * h;

    #ifdef PERSP_CORRECT
        // Divide vertex-attribute by the vertex z-coordinate
        c0[0] /= v0[2], c0[1] /= v0[2], c0[2] /= v0[2];
        c1[0] /= v1[2], c1[1] /= v1[2], c1[2] /= v1[2];
        c2[0] /= v2[2], c2[1] /= v2[2], c2[2] /= v2[2];
        // Pre-compute 1 over z
        v0[2] = 1 / v0[2], v1[2] = 1 / v1[2], v2[2] = 1 / v2[2];
    #endif

        Rgb *framebuffer = new Rgb[w * h];
        memset(framebuffer, 0, w * h * 3);

        float area = edgeFunction(v0, v1, v2);

        for (uint32_t j = 0; j < h; ++j) {
            for (uint32_t i = 0; i < w; ++i) {
                // Pixel center (float literals avoid a narrowing conversion)
                Vec3 p = {i + 0.5f, h - j + 0.5f, 0};
                float w0 = edgeFunction(v1, v2, p);
                float w1 = edgeFunction(v2, v0, p);
                float w2 = edgeFunction(v0, v1, p);
                if (w0 >= 0 && w1 >= 0 && w2 >= 0) {
                    // Normalize to get the barycentric coordinates
                    w0 /= area, w1 /= area, w2 /= area;
                    float r = w0 * c0[0] + w1 * c1[0] + w2 * c2[0];
                    float g = w0 * c0[1] + w1 * c1[1] + w2 * c2[1];
                    float b = w0 * c0[2] + w1 * c1[2] + w2 * c2[2];
    #ifdef PERSP_CORRECT
                    float z = 1 / (w0 * v0[2] + w1 * v1[2] + w2 * v2[2]);
                    // Multiply the result by z for perspective-correct interpolation
                    r *= z, g *= z, b *= z;
    #endif
                    framebuffer[j * w + i][0] = static_cast<unsigned char>(r * 255);
                    framebuffer[j * w + i][1] = static_cast<unsigned char>(g * 255);
                    framebuffer[j * w + i][2] = static_cast<unsigned char>(b * 255);
                }
            }
        }

        // Write the image as a binary PPM file
        std::ofstream ofs;
        ofs.open("./raster2d.ppm", std::ios::binary);
        ofs << "P6\n" << w << " " << h << "\n255\n";
        ofs.write(reinterpret_cast<char*>(framebuffer), w * h * 3);
        ofs.close();

        delete[] framebuffer;

        return 0;
    }

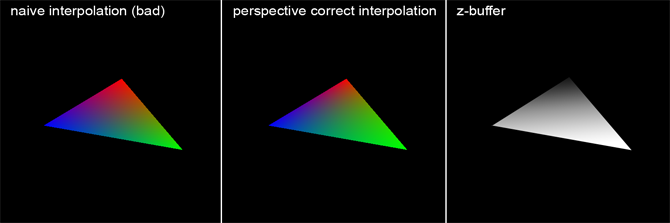

Computing the sample depth requires the reciprocal of the vertices' z-coordinates. For this reason, we pre-compute these values before looping over all pixels (the "Pre-compute 1 over z" step in the first `#ifdef PERSP_CORRECT` block). If perspective correct interpolation is enabled, the vertex attribute values are first divided by the z-coordinate of the vertex they are associated with (the three lines just above that step).

The following image illustrates, on the left, an image computed without perspective correct interpolation, in the middle, an image with perspective correct interpolation, and on the right, the content of the z-buffer (displayed as a grayscale image where objects closer to the screen appear brighter). Although the difference is subtle, in the left image each color seems to fill roughly the same area, because colors are interpolated within the "space" of the 2D triangle (as if the triangle were a flat surface parallel to the screen). However, examining the triangle vertices (and the depth buffer) reveals that the triangle is not parallel to the screen but is oriented at an angle. Because the vertex painted in green is closer to the camera than the other two, this part of the triangle occupies a larger part of the screen, as visible in the middle image (the green area is larger than the blue or red areas). The middle image shows correct interpolation, akin to what would be achieved using a graphics API such as OpenGL or Direct3D.

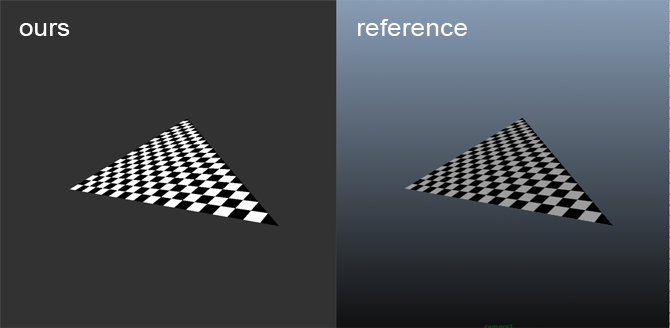

The distinction between correct and incorrect perspective interpolation becomes even more apparent when applied to texturing. In the next example, texture coordinates were assigned to the triangle vertices as vertex attributes, and these coordinates were used to generate a checkerboard pattern on the triangle. The task of rendering the triangle with or without perspective correct interpolation is left as an exercise. The image below demonstrates the result, which also aligns with an image of the same triangle featuring the same pattern rendered in Maya. This outcome suggests our code is performing as intended. Similar to color interpolation, all vertex attributes, including texture coordinates (often denoted as ST coordinates), require division by the z-coordinate of the vertex they are associated with. Later in the code, the interpolated texture coordinate value is multiplied by Z. Here are the modifications made to the code:

    // (c) www.scratchapixel.com
    #include <cstdio>
    #include <cstdlib>
    #include <fstream>
    #include <cmath> // For fmod function

    typedef float Vec2[2];
    typedef float Vec3[3];
    typedef unsigned char Rgb[3];

    inline
    float edgeFunction(const Vec3 &a, const Vec3 &b, const Vec3 &c) {
        return (c[0] - a[0]) * (b[1] - a[1]) - (c[1] - a[1]) * (b[0] - a[0]);
    }

    int main(int argc, char **argv) {
        Vec3 v2 = {-48, -10, 82};
        Vec3 v1 = {29, -15, 44};
        Vec3 v0 = {13, 34, 114};
        ...
        // Texture coordinates
        Vec2 st2 = {0, 0};
        Vec2 st1 = {1, 0};
        Vec2 st0 = {0, 1};
        ...
    #ifdef PERSP_CORRECT
        // Divide vertex attributes by the vertex's z-coordinate
        c0[0] /= v0[2], c0[1] /= v0[2], c0[2] /= v0[2];
        c1[0] /= v1[2], c1[1] /= v1[2], c1[2] /= v1[2];
        c2[0] /= v2[2], c2[1] /= v2[2], c2[2] /= v2[2];

        st0[0] /= v0[2], st0[1] /= v0[2];
        st1[0] /= v1[2], st1[1] /= v1[2];
        st2[0] /= v2[2], st2[1] /= v2[2];

        // Pre-compute the reciprocal of z
        v0[2] = 1 / v0[2], v1[2] = 1 / v1[2], v2[2] = 1 / v2[2];
    #endif

        for (uint32_t j = 0; j < h; ++j) {
            for (uint32_t i = 0; i < w; ++i) {
                // p, w0, w1 and w2 are computed as in the previous listing
                if (w0 >= 0 && w1 >= 0 && w2 >= 0) {
                    // w0, w1 and w2 are normalized by the triangle area, as before
                    float s = w0 * st0[0] + w1 * st1[0] + w2 * st2[0];
                    float t = w0 * st0[1] + w1 * st1[1] + w2 * st2[1];
    #ifdef PERSP_CORRECT
                    // Calculate the depth of the point on the 3D triangle that the pixel overlaps
                    float z = 1 / (w0 * v0[2] + w1 * v1[2] + w2 * v2[2]);
                    // Multiply the interpolated result by z for perspective-correct interpolation
                    s *= z, t *= z;
    #endif
                    // Create a checkerboard pattern
                    // (M, the number of checker repetitions, is declared in code not shown in this excerpt)
                    float pattern = (fmod(s * M, 1.0) > 0.5) ^ (fmod(t * M, 1.0) < 0.5);
                    framebuffer[j * w + i][0] = (unsigned char)(pattern * 255);
                    framebuffer[j * w + i][1] = (unsigned char)(pattern * 255);
                    framebuffer[j * w + i][2] = (unsigned char)(pattern * 255);
                }
            }
        }

        return 0;
    }
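The checkerboard test used in the listing above can be isolated into a small function, which makes the pattern easier to reason about. This is a sketch under assumptions: the function name `checker` and the `frequency` parameter (standing in for `M`) are introduced here for illustration and do not appear in the lesson's code.

```cpp
#include <cmath>

// Returns 1 for a white cell and 0 for a black cell.
// s, t      : interpolated texture coordinates
// frequency : number of pattern repetitions over the [0, 1] range
//             (hypothetical parameter standing in for M)
inline int checker(float s, float t, float frequency) {
    bool a = std::fmod(s * frequency, 1.0f) > 0.5f;
    bool b = std::fmod(t * frequency, 1.0f) < 0.5f;
    return a != b;  // XOR of the two tests alternates the cells
}
```

With a frequency of 1, the coordinates (0.25, 0.25) and (0.75, 0.75) fall in white cells, while (0.75, 0.25) and (0.25, 0.75) fall in black cells.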

Here is the result you should expect:

What's Next?

In the final chapter of this lesson, we will discuss ways to enhance the rasterization algorithm. Although we won't delve into implementing these techniques specifically, we will explain how the final code works and how these improvements could theoretically be integrated.